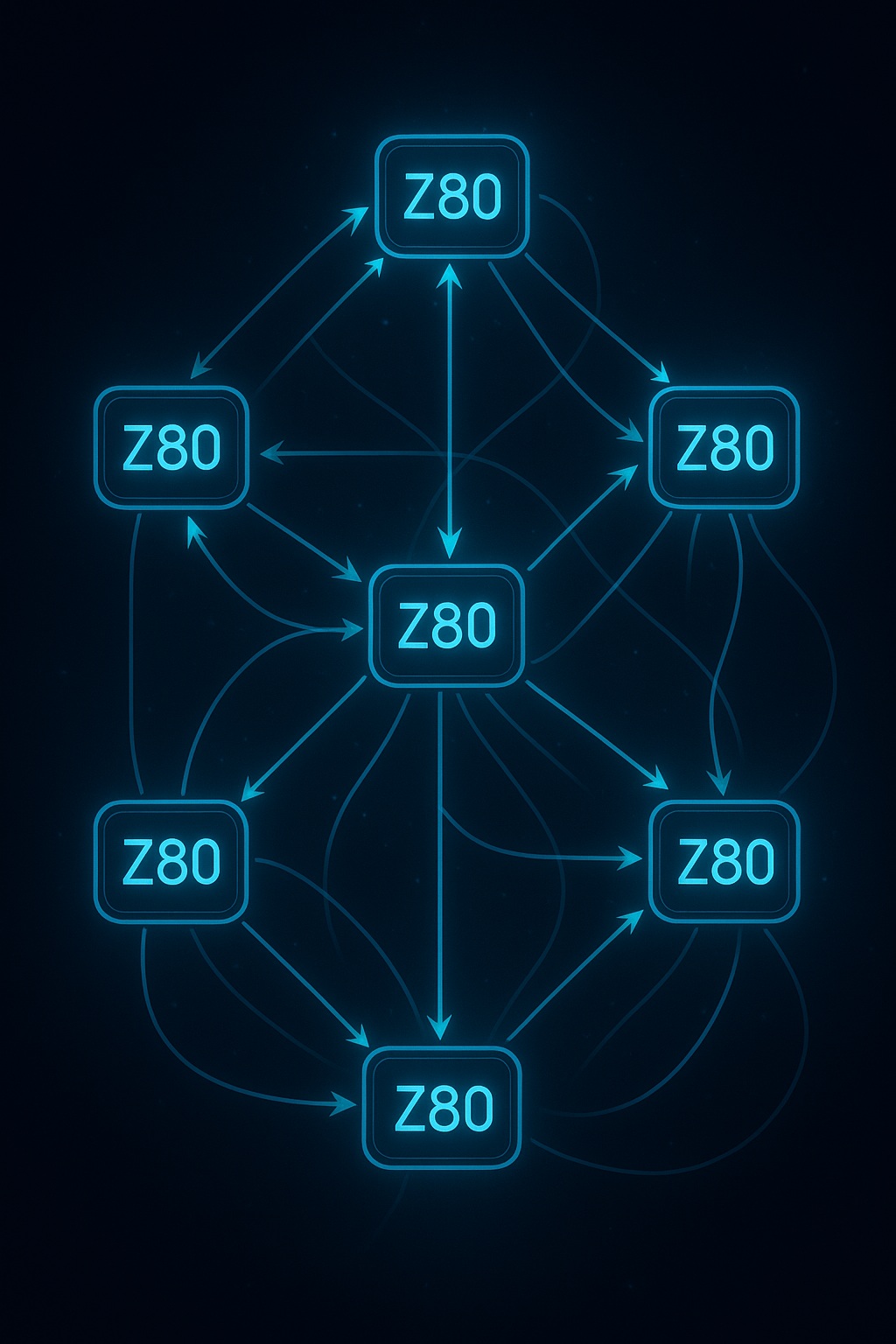

Distributed Z80 Parallel Execution Engine with Adaptive Instruction Stream Dispatcher

This project explores the idea of building a massively parallel compute system composed of multiple Zilog Z80 processors, coordinated by an intelligent front-end capable of analyzing and distributing the instruction stream across a scalable array of physical CPUs.

The core concept is an adaptive instruction dispatcher (implemented as a neural network, an expert system, or a hybrid machine-learning approach) that observes the incoming Z80 instruction stream, identifies patterns, routines, and potential subtasks, and dynamically partitions the workload across many Z80 cores. The dispatcher learns continuously from execution results, refining its scheduling strategy over time toward an increasingly efficient parallelization model.
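As an illustration of the learn-from-results loop, the sketch below swaps in an epsilon-greedy multi-armed bandit as a simple stand-in for the neural network or expert system. The dispatcher picks one of several partitioning strategies, observes the measured speedup, and keeps a running mean per strategy. The `BanditDispatcher` class, the strategy names, and the speedup figures are hypothetical placeholders, not part of the project.

```python
import random
from typing import Callable, Dict, List


class BanditDispatcher:
    """Hypothetical learning dispatcher using an epsilon-greedy bandit.

    Each "arm" is a partitioning strategy; the reward is the speedup
    measured after running the Z80 array with that strategy.
    """

    def __init__(self, strategies: List[str], epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)  # seeded for reproducible experiments
        self.counts: Dict[str, int] = {s: 0 for s in strategies}
        self.estimates: Dict[str, float] = {s: 0.0 for s in strategies}

    def choose(self) -> str:
        # Try every strategy once before trusting any estimate.
        for strategy, n in self.counts.items():
            if n == 0:
                return strategy
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.counts))
        return self.best()

    def update(self, strategy: str, speedup: float) -> None:
        # Incremental mean: estimate += (sample - estimate) / n.
        self.counts[strategy] += 1
        n = self.counts[strategy]
        self.estimates[strategy] += (speedup - self.estimates[strategy]) / n

    def best(self) -> str:
        return max(self.estimates, key=lambda s: self.estimates[s])


def run(dispatcher: BanditDispatcher, measure: Callable[[str], float], rounds: int) -> str:
    """Closed loop: choose a strategy, measure it, feed the result back."""
    for _ in range(rounds):
        strategy = dispatcher.choose()
        dispatcher.update(strategy, measure(strategy))
    return dispatcher.best()


# Placeholder speedups standing in for real measurements on the Z80 array.
observed = {"basic-block": 1.8, "loop-split": 3.2, "fixed-chunk": 1.1}
dispatcher = BanditDispatcher(list(observed), epsilon=0.1, seed=1)
winner = run(dispatcher, lambda s: observed[s], rounds=200)
print(winner)  # loop-split
```

With fixed rewards the outcome is trivial. With noisy real timings, `epsilon` controls how often the dispatcher re-tests strategies it currently believes are worse, which trades a little throughput for the ability to notice when the workload changes.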

Although the Z80 is a simple and historically dated microprocessor, its deterministic behavior, well-understood instruction set, and extremely low hardware requirements make it well suited to experimental research on large-scale parallel computation. Because the farm of Z80 CPUs can range from a handful to hundreds, the system scales with the available resources and enables exploration of:

- dynamic instruction-level parallelization

- pattern recognition in execution traces

- adaptive scheduling and load balancing

- speculative task partitioning

- hardware-driven experimentation in low-cost conditions
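The load-balancing item above can be made concrete with a classic greedy heuristic, Longest Processing Time first (LPT): sort candidate tasks by estimated cost and always hand the next one to the currently least-loaded core. This is offered as a baseline an adaptive dispatcher could be compared against, not as the project's scheduler; the `lpt_assign` function, the task names, and their costs (in Z80 T-states) are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple


def lpt_assign(tasks: Dict[str, int], n_cores: int) -> Tuple[List[List[str]], int]:
    """Longest-Processing-Time-first assignment of tasks to cores.

    `tasks` maps a task name (e.g. an extracted routine) to an estimated
    cost in T-states. Returns the per-core task lists and the makespan
    (the finishing time of the busiest core).
    """
    # Min-heap of (current load, core index): the least-loaded core is on top.
    heap = [(0, core) for core in range(n_cores)]
    heapq.heapify(heap)
    assignment: List[List[str]] = [[] for _ in range(n_cores)]
    # Place the most expensive tasks first, each on the least-loaded core.
    for name, cost in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        load, core = heapq.heappop(heap)
        assignment[core].append(name)
        heapq.heappush(heap, (load + cost, core))
    makespan = max(load for load, _ in heap)
    return assignment, makespan


tasks = {"a": 7, "b": 5, "c": 4, "d": 3, "e": 2}
assignment, makespan = lpt_assign(tasks, n_cores=2)
print(assignment, makespan)  # [['a', 'd'], ['b', 'c', 'e']] 11
```

Here 21 T-states of work finish in 11 on two cores, which is optimal for this input. LPT needs cost estimates up front, which is exactly what a learning dispatcher would have to supply from past execution traces.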

The goal is not raw performance but to investigate how far an intelligent dispatcher can push the parallel execution of code written for an inherently sequential architecture, and to evaluate whether emergent scheduling strategies can achieve meaningful speedups through large-scale physical replication of simple processors.
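One standard yardstick for "meaningful speedups" is Amdahl's law. If a fraction p of a program's work can be spread perfectly across N cores while the remainder stays serial, the achievable speedup S is bounded as shown. Using it here is an assumption about how speedup would be measured, not something the project specifies.

```latex
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}}, \qquad \lim_{N \to \infty} S(N) = \frac{1}{1 - p}

% Worked example: p = 0.9, N = 100 Z80 cores
S(100) = \frac{1}{0.1 + 0.009} = \frac{1}{0.109} \approx 9.17, \qquad \lim_{N \to \infty} S(N) = 10
```

Under this model, adding cores helps only as much as the dispatcher raises p: the serial fraction it fails to extract sets the ceiling.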

Architecture-Level Z80 Emulation

In this project, each Z80 processor is emulated not at the gate level, nor merely at the instruction level, but at an intermediate architecture-level abstraction: the level at which the processor is defined by its registers, addressing modes, execution model, micro-operations, and overall von Neumann–style architecture. The goal is to capture the structural and behavioral semantics of the Z80 without simulating individual transistors.
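To make "architecture-level" concrete, here is a minimal sketch of what one emulated core's model might look like. It has architectural registers, a flat 64 KiB memory holding both code and data, and a fetch micro-operation feeding a decode/execute step. It covers only NOP, LD A,n, LD B,n, ADD A,B and HALT, and only the documented bits of the F register. The `Z80State` class and its methods are hypothetical. A real core model would also need the full instruction set, the remaining registers, and T-state timing.

```python
from dataclasses import dataclass, field

# Bit masks for the documented flags in the Z80 F register.
FLAG_C, FLAG_N, FLAG_PV, FLAG_H, FLAG_Z, FLAG_S = 0x01, 0x02, 0x04, 0x10, 0x40, 0x80


@dataclass
class Z80State:
    """Architectural state: a few registers plus one 64 KiB address space
    shared by code and data (the von Neumann model)."""
    a: int = 0
    f: int = 0
    b: int = 0
    pc: int = 0
    halted: bool = False
    mem: bytearray = field(default_factory=lambda: bytearray(0x10000))

    def fetch(self) -> int:
        # Micro-operation: read the byte at PC, then advance PC (wrapping at 64 KiB).
        byte = self.mem[self.pc]
        self.pc = (self.pc + 1) & 0xFFFF
        return byte

    def step(self) -> None:
        opcode = self.fetch()
        if opcode == 0x00:  # NOP
            pass
        elif opcode == 0x3E:  # LD A,n
            self.a = self.fetch()
        elif opcode == 0x06:  # LD B,n
            self.b = self.fetch()
        elif opcode == 0x80:  # ADD A,B
            self.a = self._add8(self.a, self.b)
        elif opcode == 0x76:  # HALT
            self.halted = True
        else:
            raise NotImplementedError(f"opcode {opcode:#04x} not modeled in this sketch")

    def _add8(self, x: int, y: int) -> int:
        total = x + y
        r = total & 0xFF
        f = 0  # ADD resets N; undocumented bits 3 and 5 are not modeled
        if r & 0x80:
            f |= FLAG_S
        if r == 0:
            f |= FLAG_Z
        if (x & 0x0F) + (y & 0x0F) > 0x0F:  # carry out of bit 3
            f |= FLAG_H
        if ~(x ^ y) & (x ^ r) & 0x80:  # signed overflow
            f |= FLAG_PV
        if total > 0xFF:
            f |= FLAG_C
        self.f = f
        return r

    def run(self, max_steps: int = 1000) -> None:
        steps = 0
        while not self.halted and steps < max_steps:
            self.step()
            steps += 1


# LD A,0x7F ; LD B,0x01 ; ADD A,B ; HALT
cpu = Z80State()
cpu.mem[0:6] = bytes([0x3E, 0x7F, 0x06, 0x01, 0x80, 0x76])
cpu.run()
print(hex(cpu.a), hex(cpu.f))  # 0x80 0x94
```

The result shows why register and flag state matter to the dispatcher: 0x7F + 0x01 sets S, H and P/V (signed overflow) in a single step, and any later instruction reading those flags depends on this one.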

The intelligent dispatcher (whether implemented as a neural network or an expert system) also operates on this architecture-level representation, analyzing register flows, memory access patterns, and execution traces to identify parallelizable regions of the instruction stream. This keeps the learning system at the same conceptual level as the emulated CPUs, so that analysis, scheduling, and adaptive parallelization all work from one consistent model of execution.
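Here is a minimal sketch of the register-flow part of that analysis. It assumes each decoded instruction is annotated with the registers and flags it reads and writes. Any read-after-write (RAW), write-after-read (WAR) or write-after-write (WAW) overlap with an earlier instruction pushes an instruction to a later level, and instructions that share a level are candidates for concurrent dispatch. Memory accesses are ignored here, F is treated as a single resource, and the `Instr` type and `schedule_levels` function are hypothetical.

```python
from typing import List, NamedTuple, Set


class Instr(NamedTuple):
    text: str         # assembly mnemonic, for display only
    reads: Set[str]   # registers/flags read
    writes: Set[str]  # registers/flags written


def schedule_levels(trace: List[Instr]) -> List[List[int]]:
    """Group instruction indices into dependency levels (ASAP scheduling).

    An instruction lands one level after the latest earlier instruction it
    has a RAW, WAR or WAW register conflict with.
    """
    level = [0] * len(trace)
    for j, cur in enumerate(trace):
        for i in range(j):
            prev = trace[i]
            raw = prev.writes & cur.reads
            war = prev.reads & cur.writes
            waw = prev.writes & cur.writes
            if raw or war or waw:
                level[j] = max(level[j], level[i] + 1)
    groups: List[List[int]] = [[] for _ in range(max(level, default=-1) + 1)]
    for idx, lv in enumerate(level):
        groups[lv].append(idx)
    return groups


trace = [
    Instr("LD A,5", set(), {"A"}),
    Instr("LD B,3", set(), {"B"}),
    Instr("ADD A,B", {"A", "B"}, {"A", "F"}),
    Instr("LD C,7", set(), {"C"}),
    Instr("INC C", {"C"}, {"C", "F"}),
]
print(schedule_levels(trace))  # [[0, 1, 3], [2], [4]]
```

Treating F as one unit is conservative: INC C lands after ADD A,B only because both write flags. Register renaming could remove such WAR and WAW conflicts, leaving only the true RAW dependencies.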